So I built a server. Its name is Titan. My storage situation had become unwieldy: seven bare drives and a hot-swap bay. Because inserting the right bare drive for each backup was such a hassle, I only backed up on an as-needed basis. Regular backups happened once a month at best, and sometimes only once every three months. Some data was lost. On top of that, my main computer's internal storage is an 8TB RAID0 volume striped across two drives, and I didn't even have an 8TB bare drive to back up that volume in full. In total, I have roughly 12TB of personal data, which isn't too bad for a videographer. However, as that storage requirement kept growing, it was clear that bare hard drives were no longer cutting it for backup, so I decided to finally tackle the problem. The solution would be a server with a large hard drive array to make managing digital storage more convenient.

The Solution

For what is primarily a family storage solution, a traditional RAID would make expanding the array a hassle and would put more data at risk: because data is striped across the disks, losing more drives than the parity can cover takes down the whole array. unRAID proved to be a perfect fit during its initial free trial. Like RAID, unRAID provides parity protection, but unlike traditional RAID, data is not striped. It is sort of like JBOD with parity, except that instead of exporting each disk separately, unRAID exposes SMB user shares, such as "Family Photos", that can span multiple disks. From the user's perspective, you simply write to the "Family Photos" share, and the unRAID server decides which of the disks assigned to that share receive the new data.
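
unRAID lets each share choose an allocation method (high-water, fill-up, or most-free) that decides which disk receives a new file. The sketch below models only a simplified most-free rule with a minimum-free-space floor. The `Disk` type, the function name, and the disk sizes are hypothetical, and unRAID's real logic also honors settings such as split level and included/excluded disks.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Disk:
    name: str
    free_gb: int  # free space on this disk, in GB


def pick_disk_most_free(disks: Sequence[Disk], min_free_gb: int) -> Optional[Disk]:
    """Simplified "most-free" allocation: choose the disk with the most free space.

    Disks below the minimum-free-space floor are skipped; returns None if none qualify.
    """
    eligible = [d for d in disks if d.free_gb >= min_free_gb]
    if not eligible:
        return None
    return max(eligible, key=lambda d: d.free_gb)


# Hypothetical disks belonging to a "Family Photos" share.
family_photos = [Disk("disk1", 500), Disk("disk2", 2000), Disk("disk3", 1000)]
target = pick_disk_most_free(family_photos, min_free_gb=100)
print(target.name if target else "share is full")  # disk2
```

The user never sees this decision: the file simply appears in the share, whichever disk it landed on.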

The server holds a combination of PC backups and primary storage for family photos and video. It also serves as a personal cloud and as a Plex media server for OTA DVR recordings and the Blu-ray movies that I own. Editing video directly from the server was not a goal, so I/O performance above 100MB/s was not a priority, and a striped storage system would have added little value for this use case. The server also uses standard gigabit networking, and a single drive can easily saturate the roughly 100MB/s that gigabit Ethernet delivers in practice. To really benefit from RAID striping, 10Gbit networking is preferable. Hopefully it trickles down to the consumer market soon, but for now most consumers are focused on wireless networking.
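
The gigabit ceiling is simple arithmetic: 1,000 Mbit/s divided by 8 is 125 MB/s before protocol overhead. The sketch below assumes that about 94% of that survives Ethernet, IP, and TCP framing, and it uses a hypothetical single drive that sustains 180 MB/s sequentially. Both figures are illustrative assumptions, not measurements from this server.

```python
from typing import Tuple


def link_payload_mb_per_s(link_mbit_per_s: float, efficiency: float = 0.94) -> float:
    """Approximate usable payload rate in MB/s for a network link.

    `efficiency` is an assumed fraction left after Ethernet/IP/TCP framing overhead.
    """
    return link_mbit_per_s / 8 * efficiency


def transfer_bottleneck(link_mbit_per_s: float, disk_mb_per_s: float) -> Tuple[float, str]:
    """Return the effective transfer rate and which side limits it."""
    network = link_payload_mb_per_s(link_mbit_per_s)
    if disk_mb_per_s < network:
        return disk_mb_per_s, "disk"
    return network, "network"


ASSUMED_DISK_MB_PER_S = 180  # hypothetical single-drive sequential speed

for link in (1_000, 10_000):
    rate, limit = transfer_bottleneck(link, ASSUMED_DISK_MB_PER_S)
    print(f"{link} Mbit/s link: ~{rate:.1f} MB/s, limited by the {limit}")
```

On gigabit the network is the bottleneck even for one drive; only at 10Gbit does the disk become the limit, which is where striping starts to pay off.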

Not striping the drives also saves power, because drives that are not being accessed can be spun down. Usually only my 5TB DVR drive and the parity drive spin constantly, and even these spin down at times. The other five drives can be spun down to save power and reduce wear and tear. With parity, any single drive can fail without data loss, because its contents can be rebuilt from the remaining drives. If I am really unlucky and two drives fail at once, only the data on those specific drives will be lost.
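
Single parity in unRAID works by XOR: each bit on the parity drive is the XOR of the corresponding bits on every data drive, so any one missing drive can be recomputed from the others. Here is a minimal sketch on tiny in-memory "disks"; the function names and byte values are made up for illustration. Because unRAID does not stripe, each surviving data drive still holds complete files, which is why a double failure loses only the two failed drives' contents.

```python
from functools import reduce
from typing import List


def xor_blocks(blocks: List[bytes]) -> bytes:
    """XOR equal-length byte blocks together, byte position by byte position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def compute_parity(data_disks: List[bytes]) -> bytes:
    # Each parity byte is the XOR of the bytes at the same offset on every data disk.
    return xor_blocks(data_disks)


def rebuild_missing(surviving_disks: List[bytes], parity: bytes) -> bytes:
    # XOR of all survivors plus parity cancels them out, leaving the missing disk's bytes.
    return xor_blocks(list(surviving_disks) + [parity])


disks = [b"\x01\x02\x03", b"\x10\x20\x30", b"\xff\x00\x0f"]  # three tiny hypothetical data disks
parity = compute_parity(disks)
recovered = rebuild_missing([disks[0], disks[2]], parity)  # pretend disks[1] failed
print(recovered == disks[1])  # True
```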

Enterprise Gear

The best performance-to-cost ratio today comes from buying and repurposing older enterprise gear. This is especially worthwhile because CPU architecture has not changed dramatically since 2012, and I really believe that era is the sweet spot. My MacBook Pro is a late 2013 model (one of the last with NVIDIA discrete graphics), and I built my desktop in 2012. The good news is that both are far from obsolete! In some cases, the Intel i7-2600K still outperforms later-generation CPUs.

Note: If you are building a desktop computer now, just use the latest generation parts. Consumer PC parts don't depreciate as nicely as enterprise parts.

If you look at Intel's spec sheets, you will see that the improvements over the last few years mostly concern power savings. If you run a large server farm, replacing older servers is worth it. If you are a hobbyist or just want one powerful server, buying gear from 2012 is the better route. The market is flooded with older enterprise gear, and as a result prices are excellent. For instance, a pair of 8-core Xeon E5-2600-series CPUs can be had for only $135 on eBay, a fraction of the roughly $1,300 they sold for new. In multithreaded workloads, that pair easily blows a $300 4-core consumer Intel i7 out of the water. If you shop around, you can do even better: sometimes it is more economical to buy a whole 1U server and pull the motherboard and processors.
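
Putting the quoted prices on a per-core basis makes the gap concrete. This is a rough sketch using only the numbers in the paragraph above; it deliberately ignores per-core speed, single-threaded performance, and power draw, all of which favor the newer consumer chip.

```python
def cost_per_core(price_usd: float, cores: int) -> float:
    return price_usd / cores


# Prices quoted above: $135 for two 8-core Xeons, $300 for one 4-core i7.
xeon_pair = cost_per_core(135, 2 * 8)
consumer_i7 = cost_per_core(300, 4)
print(f"Xeon pair: ${xeon_pair:.2f}/core, i7: ${consumer_i7:.2f}/core")
# Xeon pair: $8.44/core, i7: $75.00/core
```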

Some builders go with even older server parts, from before 2010, but I strongly recommend against that. Sandy Bridge was a big jump in power and heat efficiency, and it has important modern features such as AES-NI hardware encryption acceleration.
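
Before committing a used CPU to encryption-heavy work (VPN, encrypted backups, HTTPS), it is worth confirming that it reports the `aes` flag (Intel's AES-NI) and `avx`. On x86 Linux these appear on the `flags` lines of `/proc/cpuinfo`. The helper names below are hypothetical, and the parser only understands the x86 `flags` format.

```python
from typing import Iterable, List, Set


def cpu_flags(cpuinfo_text: str) -> Set[str]:
    """Collect the feature flags from /proc/cpuinfo-style text (x86 Linux)."""
    flags: Set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            flags.update(value.split())
    return flags


def missing_features(cpuinfo_text: str, required: Iterable[str] = ("aes", "avx")) -> List[str]:
    """Return the required flags the CPU does not report ("aes" means AES-NI)."""
    have = cpu_flags(cpuinfo_text)
    return [flag for flag in required if flag not in have]
```

On a Linux machine (or a live USB booted on the server), `missing_features(open("/proc/cpuinfo").read())` returns an empty list when both features are present.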

Lastly, any device sold as Network Attached Storage (NAS) is overpriced these days. NAS devices are simple to manage and sometimes the best solution, but $400 only buys you a 2-drive device with a low-power CPU. If you want more bang for your buck and you are okay with tinkering, eBay is your friend: enterprise gear gets you the most functionality for your dollar.

The Build

Objective: Build a multipurpose server to solve my previous storage mess. I set a budget of only $600 for the server, which made things a bit trickier.

After a lot of research, I initially settled on a server that is still listed on eBay: a dual E5-2660 with 6GB of RAM in the Rosewill case with a 600W PSU, priced at $550. I contacted the seller to negotiate a best-offer price, but he refused what I considered a reasonable offer and said his minimum (breakeven) price was $600. When I looked at the listing again, I noticed he had raised the price to $625. So I set out to build essentially the same server for less. I finished it for only $574, and by building it myself I ended up with more RAM, a much better PSU, and a spare 1U chassis with a PSU to boot.

This build is based mostly on JDM_WAAAT's $470 dual Xeon build. The changes are a better, more efficient power supply, E5-2660 CPUs instead of E5-2650s, a bit more RAM, and a 15-bay rackmount case. Cost was the major factor in choosing parts. I was originally looking at a 2-drive consumer NAS that retails for $400; for a bit more, I was able to build a powerful multipurpose 15-bay server. Buying a 1U server to supply the motherboard and the two E5-2660 Xeon CPUs was the key to making this project so economical. There are many ways to source parts if you are looking to save money.

Parts Used:

| Type | Item | Price |
|---|---|---|
| Motherboard and CPU | Chenbro RM13704 (2x E5-2660 w/ Intel mobo) | $300 w/ tax |
| RAM | 32GB DDR3 ECC Reg memory | $60 |
| PSU | EVGA 750W G2 80+ Gold | $75 |
| CPU cooler | ARCTIC Alpine 20 Plus CO x2 | $33 |
| SATA III cables | 12 pack | $9 |
| Case | Rosewill RSV-L4500 | $97 |
| Total | | $574 shipped |
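
A quick tally confirms the total and puts it next to the $400 2-drive NAS on a cost-per-bay basis. This is a sketch using the parts table prices; it excludes drives and the unRAID license, which the NAS route would also need in some form.

```python
# Prices from the parts table (USD, shipped).
parts = {
    "Motherboard and CPU (Chenbro RM13704)": 300,
    "RAM (32GB DDR3 ECC Reg)": 60,
    "PSU (EVGA 750W G2)": 75,
    "CPU coolers (x2)": 33,
    "SATA III cables (12 pack)": 9,
    "Case (Rosewill RSV-L4500)": 97,
}

total = sum(parts.values())
server_per_bay = total / 15  # 15-bay Rosewill case
nas_per_bay = 400 / 2        # the $400 2-drive consumer NAS
print(f"Total ${total}, ${server_per_bay:.2f} per bay vs ${nas_per_bay:.2f} per bay")
# Total $574, $38.27 per bay vs $200.00 per bay
```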

I already had an unused SSD sitting in its box, and my existing hard drives populated the array. The SSD serves as a cache and as storage for VMs and Docker. NAS devices are rarely sold with hard drives included, so if you are shopping for a storage solution and do not have hard drives, they are an additional cost regardless of the route you choose. Because this server has 15 drive bays, you can save a lot of money by buying more, smaller drives, such as 4TB models, whose cost per TB is much better than that of dense 8TB drives. You will often find that the cheapest option is to buy external hard drives and remove the drive from its enclosure. Test such a drive thoroughly before opening the enclosure, so that you can still return a bad drive before voiding the warranty.
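
Comparing options by cost per TB is a one-line calculation. The prices below are hypothetical placeholders chosen only to illustrate the comparison; plug in current listings before deciding, and remember that more, smaller drives also use more bays and more power.

```python
from typing import Dict, Tuple

# Hypothetical prices for illustration only: (price in USD, capacity in TB).
EXAMPLE_PRICES: Dict[str, Tuple[float, float]] = {
    "4TB internal": (100, 4),
    "8TB internal": (240, 8),
    "8TB external (to shuck)": (160, 8),
}


def cost_per_tb(price_usd: float, capacity_tb: float) -> float:
    return price_usd / capacity_tb


def cheapest_per_tb(options: Dict[str, Tuple[float, float]]) -> str:
    """Return the label of the option with the lowest cost per TB."""
    return min(options, key=lambda label: cost_per_tb(*options[label]))


for label, (price, tb) in EXAMPLE_PRICES.items():
    print(f"{label}: ${cost_per_tb(price, tb):.2f}/TB")
print("Cheapest:", cheapest_per_tb(EXAMPLE_PRICES))
```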

The unRAID OS

The unRAID Plus operating system added $89 to the total cost, and it was certainly worth it. I could have achieved a similar result with a hodgepodge of open source software, but the time saved, the clean user interface, and the utility of the operating system quickly pay for themselves.

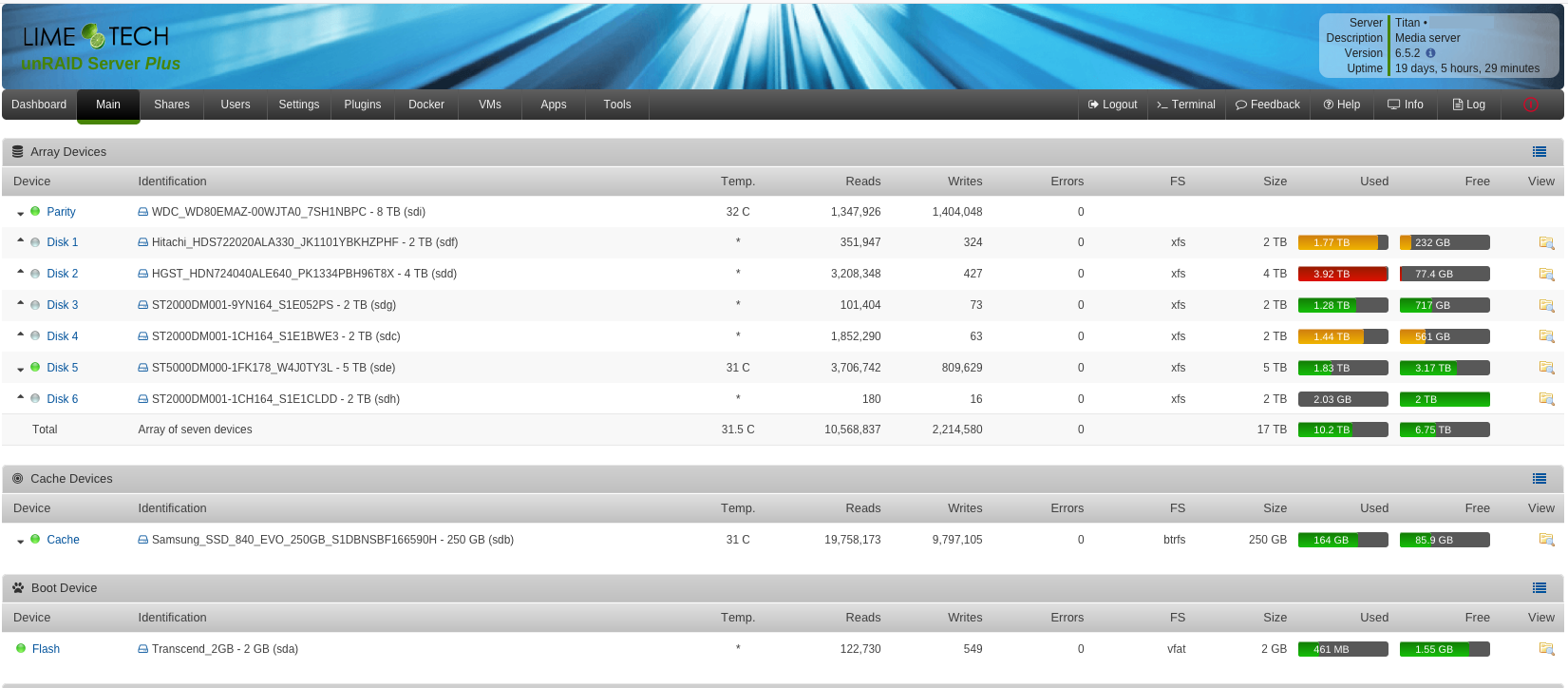

I now have 7 drives in the array, for a total of 17TB of usable space with 6.75TB currently free. I can add 4 more hard drives whenever space becomes tight. If I ever reach that point and need even more, I can add another 4 drives on top of that (for a total of 15) by upgrading to unRAID Pro.
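
In unRAID, the parity drive stores no user files and must be at least as large as the largest data drive, so usable space is simply the sum of the data drives. The sketch below uses a hypothetical drive mix, not the actual drives in Titan, and the function name is made up for illustration.

```python
from typing import Sequence


def unraid_usable_tb(data_drives_tb: Sequence[float], parity_tb: float) -> float:
    """Usable space is the sum of the data drives; the parity drive holds no files."""
    if data_drives_tb and parity_tb < max(data_drives_tb):
        raise ValueError("parity must be at least as large as the largest data drive")
    return sum(data_drives_tb)


# Hypothetical drive mix, not the actual drives in Titan.
data = [4, 4, 3, 3, 2]
print(unraid_usable_tb(data, parity_tb=4))        # 16
print(unraid_usable_tb(data + [4], parity_tb=4))  # 20 after adding one more 4TB drive
```

Because the array is not striped, growing it is just adding a drive no larger than the parity drive; there is no reshaping of existing data.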

Virtual Machines

With 32GB of RAM, 16 cores, and 32 threads, the server has plenty of spare compute for virtual machines. It currently runs a pfSense VM acting as a router, a macOS High Sierra VM (a virtualized Hackintosh!), a Windows 10 VM acting as a DVR, and a few miscellaneous Ubuntu VMs. The pfSense router is quite nice: it segments the network, serves as a secure firewall, and its DNS server actually works. The existing ASUS router, despite being a nice top-of-the-line consumer model, has a weird bug where it stops answering DNS queries from network clients whenever it has no internet connection. With pfSense, DNS keeps working and the FQDNs for services on the Titan server keep resolving when the internet drops out, which happens unusually often at this location. As a result, the cloud instance and other local services keep working when the internet goes down.
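
The behavior described above comes down to answering local names before (and independently of) asking the internet. pfSense's DNS Resolver supports host overrides for this; the toy model below only illustrates the idea. It is not pfSense's code, and the class name, hostnames, and IP address are hypothetical.

```python
from typing import Callable, Dict, Optional


class LocalFirstResolver:
    """Toy model of a LAN resolver with host overrides (not pfSense's actual code)."""

    def __init__(self, overrides: Dict[str, str], upstream: Callable[[str], str]):
        # Normalize names so "Cloud.Titan.lan." and "cloud.titan.lan" match.
        self.overrides = {self._norm(k): v for k, v in overrides.items()}
        self.upstream = upstream  # forwards queries to the internet; may raise OSError

    @staticmethod
    def _norm(name: str) -> str:
        return name.lower().rstrip(".")

    def resolve(self, name: str) -> Optional[str]:
        name = self._norm(name)
        if name in self.overrides:
            return self.overrides[name]  # answered locally, no internet needed
        try:
            return self.upstream(name)
        except OSError:
            return None  # upstream unreachable and the name is not a local override


def offline_upstream(name: str) -> str:
    raise OSError("internet is down")


resolver = LocalFirstResolver({"cloud.titan.lan": "192.168.1.10"}, offline_upstream)
print(resolver.resolve("Cloud.Titan.lan"))  # 192.168.1.10
print(resolver.resolve("example.com"))      # None
```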

Docker Containers

Docker is pretty awesome, and unRAID provides a nice user interface for it. For readers unfamiliar with the term, a Docker container is a self-contained execution environment. Whereas a virtual machine virtualizes the hardware, Docker containers essentially virtualize the operating system: each container has its own isolated CPU, memory, I/O, and network resources, but all containers share the host's kernel. They feel much like virtual machines but avoid the weight and overhead of running a full guest operating system. Docker is great for developers because a container can package everything the application needs to run: the OS userland, the Python version, dependencies, and so on. This feature alone has made building the server worth it. I've learned a lot about DevOps and about managing servers and services, and it is an excellent environment for experimenting with things that may eventually move to the public cloud. I plan to use this server to speed up development of my live streaming platform.
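
The "isolated CPU and memory" part is enforced by the host kernel's cgroups, and you can set those limits per container. The sketch below uses the Docker SDK for Python (the `docker` package), whose `containers.run` accepts `mem_limit` and `nano_cpus`. The wrapper name and its defaults are hypothetical, and this is not how unRAID's Docker UI is implemented.

```python
from typing import Any


def run_limited(client: Any, image: str, name: str, memory: str = "512m", cpus: float = 1.0) -> Any:
    """Start a detached container with cgroup limits on memory and CPU.

    `client` is assumed to be a Docker SDK for Python client, e.g. docker.from_env().
    """
    return client.containers.run(
        image,
        name=name,
        detach=True,                # return immediately with a Container object
        mem_limit=memory,           # hard memory cap, e.g. "512m"
        nano_cpus=int(cpus * 1e9),  # CPU quota in billionths of a CPU; 1.0 == one full core
    )
```

With the SDK installed, `run_limited(docker.from_env(), "nginx:alpine", "test-web", cpus=0.5)` would start a container capped at half a core. The shared kernel is easy to see as well: on a Linux host such as unRAID, `docker run --rm alpine uname -r` prints the host's kernel version, not one belonging to the image.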

Here's a list of containers currently running on the server:

- Nextcloud, Collabora, and MariaDB - these containers together enable our own private cloud. It is essentially a self-hosted Google Docs, Google Drive, and Google Hangouts alternative. This is a very handy way to use and share all that available hard drive space in the server. I also use the app on my phone to automatically upload new photographs to the server.

- Dasher - Intercepts signals from Amazon Dash buttons and converts them to MQTT. A hacked Dash button is the cheapest source of WiFi-enabled IoT switches: instead of ordering products with a Dash button, I use it as a doorbell or light switch.

- Duplicati - Encrypted backups of computers and specific unRAID shares to cloud storage.

- GitLab-CE - Self-hosted git for managing the code I write. Right now I am still mostly just using GitHub and GitLab.com.

- Firefox-sync - This is awesome. All my browsing history and bookmarks are synced without my having to entrust that sensitive data to Google.

- Home Assistant - Home Automation with Python. Most people just install this on a Raspberry Pi.

- Metabase - A slick front end for querying SQL databases and analyzing the data. Its pitch is "an easy, open source way for everyone in your company to ask questions and learn from data".

- Let's Encrypt - An NGINX reverse proxy and certbot combo for automatic SSL certificate management.

- MineOS - A Minecraft server manager. My younger sister likes this one; I haven't used it much myself.

- MQTT - An MQTT message broker running in a Docker container.

- OpenVPN AS - The OpenVPN access server. This will be decommissioned when I move VPN to the pfSense router.

- Plex - An awesome media server. Think Netflix for the movies you own and also for your home movies and video. Your media is available to stream to any device in the house and even over the internet.
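
The Dash button trick in the list above boils down to recognizing a button by its MAC address and turning a press into a message. The sketch below shows only that mapping step. It assumes that a single press shows up on the network as a short burst of packets (for example ARP or DHCP) from the button's MAC, so it ignores repeats inside a debounce window. Packet capture and MQTT publishing are left out (with paho-mqtt, the returned tuple could be passed to `client.publish(topic, payload)`), and the class name, MAC address, and topic are hypothetical. This is not how Dasher itself is implemented.

```python
import time
from typing import Dict, Optional, Tuple

Message = Tuple[str, str]  # (MQTT topic, payload)


class DashBridge:
    """Map Dash button MAC addresses to MQTT messages, ignoring repeat packets from one press."""

    def __init__(self, buttons: Dict[str, Message], debounce_s: float = 5.0):
        self.buttons = {mac.lower(): msg for mac, msg in buttons.items()}
        self.debounce_s = debounce_s
        self._last_press: Dict[str, float] = {}

    def handle(self, mac: str, now: Optional[float] = None) -> Optional[Message]:
        """Return (topic, payload) for a new press, or None if the packet should be ignored."""
        mac = mac.lower()
        if mac not in self.buttons:
            return None  # some other device on the network
        now = time.monotonic() if now is None else now
        last = self._last_press.get(mac)
        if last is not None and now - last < self.debounce_s:
            return None  # still inside the window opened by the previous accepted press
        self._last_press[mac] = now
        return self.buttons[mac]


bridge = DashBridge({"AA:BB:CC:DD:EE:FF": ("home/doorbell", "pressed")})
print(bridge.handle("aa:bb:cc:dd:ee:ff", now=100.0))  # ('home/doorbell', 'pressed')
print(bridge.handle("aa:bb:cc:dd:ee:ff", now=102.0))  # None (same press)
```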